Today, Linux hardware vendor TUXEDO Computers launched a new variant of the TUXEDO InfinityBook Max 16 Gen10 Linux laptop with an AMD Ryzen AI 9 CPU instead of an Intel CPU.

Woof, The Marathon Battle Pass Is Bad

At least the reward passes don’t expire, but that’s about the only good thing I have to say about these things

Netflix’s version of Overcooked lets you play as Huntr/x

Netflix’s library of streamable party games is expanding today with a custom version of Overcooked! All You Can Eat. Netflix launched its cloud gaming program with games like Lego Party and Tetris Time Warp, but Overcooked feels a bit unique because it features a roster of Netflix-affiliated characters from KPop Demon Hunters and Stranger Things.

For the uninitiated, Overcooked plays like a more manic version of Diner Dash, where teams attempt to prepare food together in increasingly elaborate kitchens filled with obstacles. The original version of Overcooked! All You Can Eat was released in 2020, and includes DLC and stages from previous versions of the game. Netflix’s version bundles in the same content, and “10 Netflix celebrity chefs” including “Dustin, Eleven, Lucas, and the Demogorgon from Stranger Things,” and “half-dozen faces from KPop Demon Hunters,” like “Mira, Rumi, Zoey, Jinu, Derpy and Sussie.” Like Netflix’s other streaming games, playing Overcooked also requires you to use a connected smartphone as a controller.

Offering a growing library of streaming games is part of Netflix’s new strategy under Alan Tascan, a former executive from Epic Games. Tascan took over as Netflix’s President of Games in 2024, and appeared to start revamping the company’s plans not long after, cancelling the release of several mobile games and reportedly shutting down its AAA game studio. Netflix is also continuing to adapt video games into content for its platform. For example, A24 is reportedly developing a game show based on Overcooked for the streaming service.

This article originally appeared on Engadget at https://www.engadget.com/gaming/netflixs-version-of-overcooked-lets-you-play-as-huntrx-212515187.html?src=rss

Handheld Revolver Style Model Rocket Launcher

Seen here looking like a great candidate for being the last thing a person ever sees, this is a video of YouTuber Current Concept designing, 3D printing, and testing a 3-barrel revolver that shoots model rockets. Now THIS, this is the exact sort of dangerous fun I aspire to get into on weekends. But do I? Noooooo, by the time I’ve finished the list of chores my girlfriend made, it’s time to go to work Monday morning with no sleep. I can’t go on like this. “You mean with the imaginary girlfriend?” At least she has three boobs.

Valve’s Steam Machine Console Is No Longer Guaranteed To Ship In 2026 Due To Memory Crisis

Plans around the new hardware are devolving quickly

Major Fortnite Leaker Actually Worked For Epic Games

Epic is suing the leaker known as AdiraFN, who worked at the company as an associate producer

Pokémon Pokopia Review In Progress: A Genre That Usually Loses Me Is Winning Me Over

Life sims like Animal Crossing usually overwhelm me, but Pokopia has something others don’t

CEO Behind Kickstarter MMO That Ran Out Of Money Breaks His Silence And Claims First Legal Victory

Ashes of Creation rapidly imploded and now the fight about why is going public

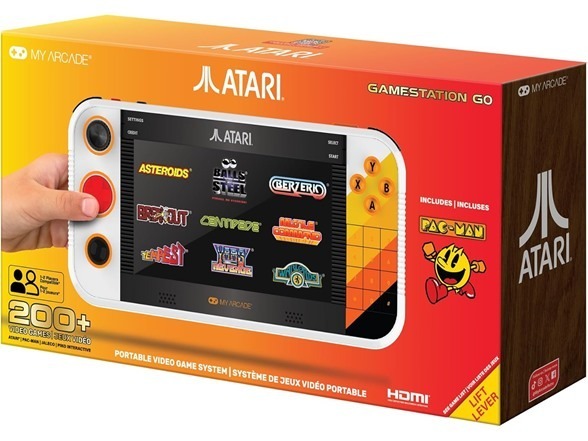

This Handheld Atari Console Comes With 200 Old-School Games, and It’s $50 Off

We may earn a commission from links on this page. Deal pricing and availability subject to change after time of publication.

If old-school Atari games like Pac-Man, Centipede, and Asteroids unlock a deep childhood nostalgia for you, check out the My Arcade Atari Gamestation Go. This handheld gaming device is packed with 200 games and is currently on Woot for an all-time low price of $129.99 (originally $179.99).

CNET praised the device’s “crazy array of controller options,” though it cautioned that some games you might remember fondly have actually aged quite poorly.

While there aren’t detachable controllers, the 7-inch screen is bigger than the original Nintendo Switch’s, and it has the classic D-pad and shoulder buttons as well as a rotating dial, a physical number pad, and a trackball wheel. It comes with a sturdy kickstand, an HDMI port, three USB-C ports, a headphone jack, and a microSD slot for side-loading games. Although you can update the system via built-in Wi-Fi, you can’t buy or download additional titles on an app store, limiting you to the pre-loaded games.

While nostalgia is the main draw of this rechargeable console, keep in mind that performance and pacing might feel different if you’re used to modern games on contemporary consoles; not all early games have aged well. That said, there are still a ton of options to choose from that will likely include your favorites, making the My Arcade Atari Gamestation Go worth it for classic gaming enthusiasts, especially at $50 off.


Mozilla Is Working On a Big Firefox Redesign

darwinmac writes: Mozilla is working on a huge redesign for its Firefox browser, codenamed “Nova,” which will bring pastel gradients, a refreshed new tab page, floating “island” UI elements, and more. “From the mockups, it appears Mozilla took some inspiration from Google’s Material You (or at least, the dynamic color extraction part of it) because the browser color accent appears influenced by the wallpaper setting,” reports Neowin. “Choosing a mint-green desktop background automatically shifts the top navigation bars to match that exact shade.”

Mozilla has a habit of redesigning Firefox every few years. Before “Nova,” there was the “Proton” redesign in 2021, the “Photon” redesign in 2017, and the “Australis” redesign in 2014. Nova is still in early development, so it might take a year or two before it appears in an official stable Firefox release. Neowin adds: “Not every redesign project ends well for Mozilla, though. You might remember 2012’s Firefox Metro, an ambitious attempt to build a custom browser for Windows 8’s touch-first interface. The team built it to operate both as a traditional desktop application and as a touch-optimized Metro app. The whole thing was scrapped in 2014 after two years in development due to a dismally low user adoption rate (a preview version of the software had been released a year earlier on the Aurora channel).”

Read more of this story at Slashdot.

Google’s new command line tool can plug OpenClaw into your Workspace data

The command line is hot again. For some people, command lines were never not hot, of course, but it’s becoming more common now in the age of AI. Google launched a Gemini command line tool last year, and now it has a new AI-centric command line option for cloud products. The new Google Workspace CLI bundles the company’s existing cloud APIs into a package that makes it easy to integrate with a variety of AI tools, including OpenClaw. How do you know this setup won’t blow up and delete all your data? That’s the fun part—you don’t.

There are some important caveats with the Workspace tool. While this new GitHub project is from Google, it’s “not an officially supported Google product.” So you’re on your own if you choose to use it. The company notes that functionality may change dramatically as Google Workspace CLI continues to evolve, and that could break workflows you’ve created in the meantime.

For people who are interested in tinkering with AI automations and don’t mind the inherent risks, Google Workspace CLI has a lot to offer even at this early stage. It includes the APIs for every Workspace product, including Gmail, Drive, and Calendar. It’s designed for use by humans and AI agents, but like everything else Google does now, there’s a clear emphasis on AI.

GTK 4.22 Released With Improved SVG Support, Reduced Motion Option

Ahead of the GNOME 50 desktop release coming up in less than two weeks, GTK 4.22 is out today as the newest stable version of the GTK4 toolkit…

Feds take notice of iOS vulnerabilities exploited under mysterious circumstances

The Cybersecurity and Infrastructure Security Agency has ordered federal agencies to patch three critical iOS vulnerabilities that were exploited over a 10-month span in hacking campaigns conducted by three distinct groups.

The hacking campaigns came to light on Thursday in a report published by Google. All three campaigns used Coruna, the name of an advanced hacking kit that amassed 23 separate iOS exploits into five potent exploit chains. While some of the vulnerabilities had been exploited as zero-days in earlier, unrelated campaigns, all had been patched by the time Google observed them being exploited by Coruna. When used against older iOS versions, the kit nonetheless posed a formidable threat given the high caliber of the exploit code and the wide range of capabilities.

The case of the promiscuous 2nd-hand zero-days

“The core technical value of this exploit kit lies in its comprehensive collection of iOS exploits,” Google researchers wrote. “The exploits feature extensive documentation, including docstrings and comments authored in native English. The most advanced ones are using non-public exploitation techniques and mitigation bypasses.”

Final Fantasy VII Remake Director Teases A Bunch Of Big Stuff About Part 3

Players have high expectations for the RPG remake trilogy going into the finale

Nintendo is suing the US government over Trump’s tariffs

Nintendo of America is suing the US government, including the Department of Treasury, Department of Homeland Security and US Customs & Border Protection, over its tariff policy, Aftermath reports. The video game giant already raised prices on the Nintendo Switch in August 2025 in response to “market conditions” but has so far left the price of the newer Switch 2 console unchanged.

Nintendo’s lawsuit, filed in the US Court of International Trade, cites a Supreme Court ruling from February that confirmed lower courts’ opinions that the Trump administration’s global tariffs were illegal. Nintendo’s lawyers claim that the video game company has been “substantially harmed by the unlawful of execution and imposition” of “unauthorized Executive Orders” and the fees Nintendo has already paid to import products into the country. In response, the company is seeking a “prompt refund, with interest” of any fees that it’s paid.

Engadget has reached out to Nintendo of America about its lawsuit and will update this article if we hear back.

While taxes and other trade policies are supposed to be set by Congress, President Donald Trump implemented a collection of global tariffs over the course of his first year in office using executive orders and the International Emergency Economic Powers Act (IEEPA), a law that gives the President extended powers over trade during a global emergency. The Trump administration has positioned the tariffs as a way to punish enemies and bargain with trade partners, but many companies have passed the increased price of importing goods onto customers.

Developing…

This article originally appeared on Engadget at https://www.engadget.com/gaming/nintendo/nintendo-is-suing-the-us-government-over-trumps-tariffs-191849003.html?src=rss

Samsung Galaxy S26 Ultra Performance Preview: Early Benchmarks Revealed

Samsung officially announced its latest Galaxy S26 family of devices last week, powered by an exclusive version of Qualcomm’s Snapdragon 8 Elite Gen 5 mobile platform. The lineup consists of the flagship Galaxy S26 Ultra ($1299), Galaxy S26+ ($1099), and base model Galaxy S26 ($899), all of which receive some incremental updates. In…

New ASUS, Dell & OneXPlayer Hardware Support In Linux 7.0-rc3

A pull request sent out today and already merged to Linux Git ahead of Sunday’s Linux 7.0-rc3 has some new hardware driver support additions for the likes of ASUS, HP, Dell, and OneXPlayer…

Ayaneo Refreshes Pocket Air Mini Budget Handheld With A Limited Edition Release

Upon its release, Ayaneo’s Pocket Air Mini received praise for its build quality, strong Nintendo GameCube emulation performance, and entry-level pricing under $100. While the price of the device has increased over time, it’s still a solid budget handheld. Now, Ayaneo is celebrating with a refresh by releasing a new model in collaboration

Linux From Scratch 13.0 Released as First Systemd-Only Version

The Linux From Scratch project has released LFS 13.0 and BLFS 13.0, featuring updated packages, the 6.18.10 kernel, and a systemd-only build.

Asteroid defense mission shifted the orbit of more than its target

On September 26, 2022, NASA’s Double Asteroid Redirection Test (DART) spacecraft crashed into a binary asteroid system. By intentionally ramming a probe into the 160-meter-wide moonlet named Dimorphos, the smaller of the two asteroids, humanity demonstrated that the kinetic impact method of planetary defense actually works. The immediate result was that Dimorphos’ orbital period around Didymos, its larger parent body, was slashed by 33 minutes.

Of course, altering a moonlet’s local orbit doesn’t seem like enough to safeguard Earth from civilization-ending impacts. But now, as long-term observational data has come in, it seems we accomplished more than that. DART actually changed the trajectory of the entire Didymos binary system, altering its orbit around the Sun.

Tracking space rocks

Measuring the orbital shift of a 780-meter-wide primary asteroid and its moonlet from millions of miles away isn’t trivial. When DART slammed into Dimorphos, it didn’t knock the binary system wildly off its trajectory around the Sun. The change in the system’s heliocentric trajectory was expected to be small, a minuscule nudge that would become apparent only after months or years of continuous observation. By analyzing enough painstakingly gathered data, a global team of researchers led by Rahil Makadia at the University of Illinois Urbana-Champaign has now determined the consequences of the DART impact.